Iceberg Table Spec🔗

This is a specification for the Iceberg table format that is designed to manage a large, slow-changing collection of files in a distributed file system or key-value store as a table.

Format Versioning🔗

Versions 1, 2 and 3 of the Iceberg spec are complete and adopted by the community.

Version 4 is under active development and has not been formally adopted.

The format version number is incremented when new features are added that will break forward-compatibility---that is, when older readers would not read newer table features correctly. Tables may continue to be written with an older version of the spec to ensure compatibility by not using features that are not yet implemented by processing engines.

Version 1: Analytic Data Tables🔗

Version 1 of the Iceberg spec defines how to manage large analytic tables using immutable file formats: Parquet, Avro, and ORC.

All version 1 data and metadata files are valid after upgrading a table to version 2. Appendix E documents how to default version 2 fields when reading version 1 metadata.

Version 2: Row-level Deletes🔗

Version 2 of the Iceberg spec adds row-level updates and deletes for analytic tables with immutable files.

The primary change in version 2 adds delete files to encode rows that are deleted in existing data files. This version can be used to delete or replace individual rows in immutable data files without rewriting the files.

In addition to row-level deletes, version 2 makes some requirements stricter for writers. The full set of changes are listed in Appendix E.

Version 3: Extended Types and Capabilities🔗

Version 3 of the Iceberg spec extends data types and existing metadata structures to add new capabilities:

- New data types: nanosecond timestamp(tz), unknown, variant, geometry, geography

- Default value support for columns

- Multi-argument transforms for partitioning and sorting

- Row Lineage tracking

- Binary deletion vectors

- Table encryption keys

The full set of changes are listed in Appendix E.

Goals🔗

- Serializable isolation -- Reads will be isolated from concurrent writes and always use a committed snapshot of a table’s data. Writes will support removing and adding files in a single operation and are never partially visible. Readers will not acquire locks.

- Speed -- Operations will use O(1) remote calls to plan the files for a scan and not O(n) where n grows with the size of the table, like the number of partitions or files.

- Scale -- Job planning will be handled primarily by clients and not bottleneck on a central metadata store. Metadata will include information needed for cost-based optimization.

- Evolution -- Tables will support full schema and partition spec evolution. Schema evolution supports safe column add, drop, reorder and rename, including in nested structures.

- Dependable types -- Tables will provide well-defined and dependable support for a core set of types.

- Storage separation -- Partitioning will be table configuration. Reads will be planned using predicates on data values, not partition values. Tables will support evolving partition schemes.

- Formats -- Underlying data file formats will support identical schema evolution rules and types. Both read-optimized and write-optimized formats will be available.

Overview🔗

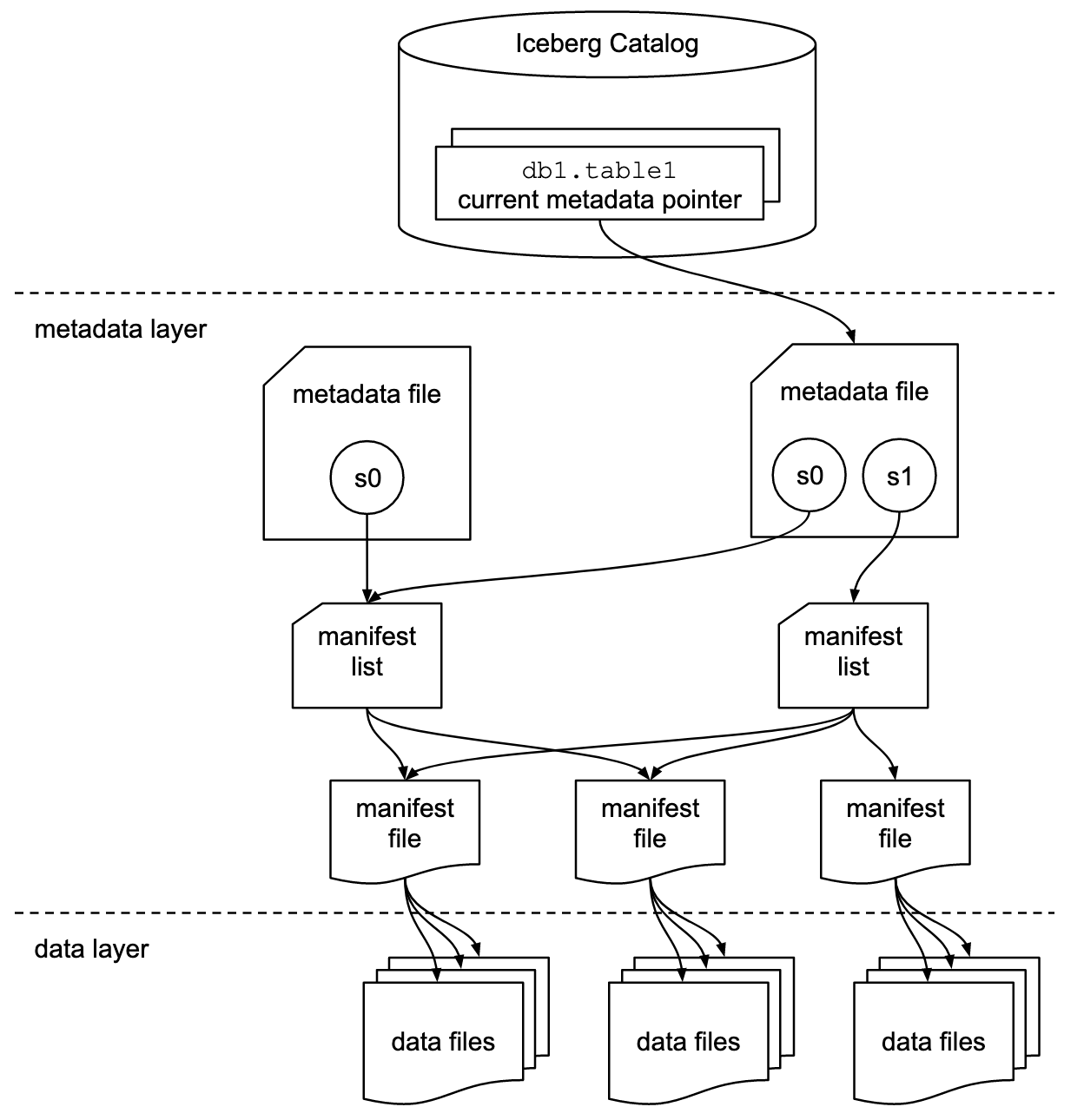

This table format tracks individual data files in a table instead of directories. This allows writers to create data files in-place and only adds files to the table in an explicit commit.

Table state is maintained in metadata files. All changes to table state create a new metadata file and replace the old metadata with an atomic swap. The table metadata file tracks the table schema, partitioning config, custom properties, and snapshots of the table contents. A snapshot represents the state of a table at some time and is used to access the complete set of data files in the table.

Data files in snapshots are tracked by one or more manifest files that contain a row for each data file in the table, the file's partition data, and its metrics. The data in a snapshot is the union of all files in its manifests. Manifest files are reused across snapshots to avoid rewriting metadata that is slow-changing. Manifests can track data files with any subset of a table and are not associated with partitions.

The manifests that make up a snapshot are stored in a manifest list file. Each manifest list stores metadata about manifests, including partition stats and data file counts. These stats are used to avoid reading manifests that are not required for an operation.

Optimistic Concurrency🔗

An atomic swap of one table metadata file for another provides the basis for serializable isolation. Readers use the snapshot that was current when they load the table metadata and are not affected by changes until they refresh and pick up a new metadata location.

Writers create table metadata files optimistically, assuming that the current version will not be changed before the writer's commit. Once a writer has created an update, it commits by swapping the table’s metadata file pointer from the base version to the new version.

If the snapshot on which an update is based is no longer current, the writer must retry the update based on the new current version. Some operations support retry by re-applying metadata changes and committing, under well-defined conditions. For example, a change that rewrites files can be applied to a new table snapshot if all of the rewritten files are still in the table.

The conditions required by a write to successfully commit determines the isolation level. Writers can select what to validate and can make different isolation guarantees.

Sequence Numbers🔗

The relative age of data and delete files relies on a sequence number that is assigned to every successful commit. When a snapshot is created for a commit, it is optimistically assigned the next sequence number, and it is written into the snapshot's metadata. If the commit fails and must be retried, the sequence number is reassigned and written into new snapshot metadata.

All manifests, data files, and delete files created for a snapshot inherit the snapshot's sequence number. Manifest file metadata in the manifest list stores a manifest's sequence number. New data and metadata file entries are written with null in place of a sequence number, which is replaced with the manifest's sequence number at read time. When a data or delete file is written to a new manifest (as "existing"), the inherited sequence number is written to ensure it does not change after it is first inherited.

Inheriting the sequence number from manifest metadata allows writing a new manifest once and reusing it in commit retries. To change a sequence number for a retry, only the manifest list must be rewritten -- which would be rewritten anyway with the latest set of manifests.

Row-level Deletes🔗

Row-level deletes are stored in delete files.

There are two types of row-level deletes:

-

Position deletes -- Mark a row deleted by data file path and the row position in the data file. Position deletes are encoded in a position delete file (V2) or deletion vector (V3 or above).

-

Equality deletes -- Mark a row deleted by one or more column values, like id = 5. Equality deletes are encoded in equality delete file.

Like data files, delete files are tracked by partition. In general, a delete file must be applied to older data files with the same partition; see Scan Planning for details. Column metrics can be used to determine whether a delete file's rows overlap the contents of a data file or a scan range.

File System Operations🔗

Iceberg only requires that file systems support the following operations:

- In-place write -- Files are not moved or altered once they are written.

- Seekable reads -- Data file formats require seek support.

- Deletes -- Tables delete files that are no longer used.

These requirements are compatible with object stores, like S3.

Tables do not require random-access writes. Once written, data and metadata files are immutable until they are deleted.

Tables do not require rename, except for tables that use atomic rename to implement the commit operation for new metadata files.

Specification🔗

Terms🔗

- Schema -- Names and types of fields in a table.

- Partition spec -- A definition of how partition values are derived from data fields.

- Snapshot -- The state of a table at some point in time, including the set of all data files.

- Manifest list -- A file that lists manifest files; one per snapshot.

- Manifest -- A file that lists data or delete files; a subset of a snapshot.

- Data file -- A file that contains rows of a table.

- Delete file -- A file that encodes rows of a table that are deleted by position or data values.

Writer requirements🔗

Some tables in this spec have columns that specify requirements for tables by version. These requirements are intended for writers when adding metadata files (including manifests files and manifest lists) to a table with the given version.

| Requirement | Write behavior |

|---|---|

| (blank) | The field should be omitted |

| optional | The field can be written or omitted |

| required | The field must be written |

Readers should be more permissive because v1 metadata files are allowed in v2 tables (or later) so that tables can be upgraded to without rewriting the metadata tree. For manifest list and manifest files, this table shows the expected read behavior for later versions:

| v1 | v2 | v2+ read behavior |

|---|---|---|

| optional | Read the field as optional | |

| required | Read the field as optional; it may be missing in v1 files | |

| optional | Ignore the field | |

| optional | optional | Read the field as optional |

| optional | required | Read the field as optional; it may be missing in v1 files |

| required | Ignore the field | |

| required | optional | Read the field as optional |

| required | required | Fill in a default or throw an exception if the field is missing |

If a later version is not shown, the requirement for a version is not changed from the most recent version shown. For example, v3 uses the same requirements as v2 if a table shows only v1 and v2 requirements.

Readers may be more strict for metadata JSON files because the JSON files are not reused and will always match the table version. Required fields that were not present in or were optional in prior versions may be handled as required fields. For example, a v2 table that is missing last-sequence-number can throw an exception.

Writing data files🔗

All columns must be written to data files even if they introduce redundancy with metadata stored in manifest files (e.g. columns with identity partition transforms). Writing all columns provides a backup in case of corruption or bugs in the metadata layer.

Writers are not allowed to commit files with a partition spec that contains a field with an unknown transform.

Schemas and Data Types🔗

A table's schema is a list of named columns. All data types are either primitives or nested types, which are maps, lists, or structs. A table schema is also a struct type.

For the representations of these types in Avro, ORC, and Parquet file formats, see Appendix A.

Nested Types🔗

A struct is a tuple of typed values. Each field in the tuple is named and has an integer id that is unique in the table schema. Each field can be either optional or required, meaning that values can (or cannot) be null. Fields may be any type. Fields may have an optional comment or doc string. Fields can have default values.

A list is a collection of values with some element type. The element field has an integer id that is unique in the table schema. Elements can be either optional or required. Element types may be any type.

A map is a collection of key-value pairs with a key type and a value type. Both the key field and value field each have an integer id that is unique in the table schema. Map keys are required and map values can be either optional or required. Both map keys and map values may be any type, including nested types.

Semi-structured Types🔗

A variant is a value that stores semi-structured data. The structure and data types in a variant are not necessarily consistent across rows in a table or data file. The variant type and binary encoding are defined in the Parquet project, with support currently available for V1. Support for Variant is added in Iceberg v3.

Variants are similar to JSON with a wider set of primitive values including date, timestamp, timestamptz, binary, and decimals.

Variant values may contain nested types:

- An array is an ordered collection of variant values.

- An object is a collection of fields that are a string key and a variant value.

As a semi-structured type, there are important differences between variant and Iceberg's other types:

- Variant arrays are similar to lists, but may contain any variant value rather than a fixed element type.

- Variant objects are similar to structs, but may contain variable fields identified by name and field values may be any variant value rather than a fixed field type.

Primitive Types🔗

Supported primitive types are defined in the table below. Primitive types added after v1 have an "added by" version that is the first spec version in which the type is allowed. For example, nanosecond-precision timestamps are part of the v3 spec; using v3 types in v1 or v2 tables can break forward compatibility.

| Added by version | Primitive type | Description | Requirements |

|---|---|---|---|

| v3 | unknown |

Default / null column type used when a more specific type is not known | Must be optional with null defaults; not stored in data files |

boolean |

True or false | ||

int |

32-bit signed integers | Can promote to long |

|

long |

64-bit signed integers | ||

float |

32-bit IEEE 754 floating point | Can promote to double | |

double |

64-bit IEEE 754 floating point | ||

decimal(P,S) |

Fixed-point decimal; precision P, scale S | Scale is fixed, precision must be 38 or less | |

date |

Calendar date without timezone or time | ||

time |

Time of day, microsecond precision, without date, timezone | ||

timestamp |

Timestamp, microsecond precision, without timezone | [1] | |

timestamptz |

Timestamp, microsecond precision, with timezone | [2] | |

| v3 | timestamp_ns |

Timestamp, nanosecond precision, without timezone | [1] |

| v3 | timestamptz_ns |

Timestamp, nanosecond precision, with timezone | [2] |

string |

Arbitrary-length character sequences | Encoded with UTF-8 [3] | |

uuid |

Universally unique identifiers | Should use 16-byte fixed | |

fixed(L) |

Fixed-length byte array of length L | ||

binary |

Arbitrary-length byte array | ||

| v3 | geometry(C) |

Geospatial features from OGC – Simple feature access. Edge-interpolation is always linear/planar. See Appendix G. Parameterized by CRS C. If not specified, C is OGC:CRS84. |

|

| v3 | geography(C, A) |

Geospatial features from OGC – Simple feature access. See Appendix G. Parameterized by CRS C and edge-interpolation algorithm A. If not specified, C is OGC:CRS84 and A is spherical. |

Notes:

- Timestamp values without time zone represent a date and time of day regardless of zone: the time value is independent of zone adjustments (

2017-11-16 17:10:34is always retrieved as2017-11-16 17:10:34). - Timestamp values with time zone represent a point in time: values are stored as UTC and do not retain a source time zone (

2017-11-16 17:10:34 PSTis stored/retrieved as2017-11-17 01:10:34 UTCand these values are considered identical). - Character strings must be stored as UTF-8 encoded byte arrays.

For details on how to serialize a schema to JSON, see Appendix C.

CRS🔗

For geometry and geography types, the parameter C refers to the CRS (coordinate reference system), a mapping of how coordinates refer to locations on Earth.

For geometry type, the CRS does not affect geometric calculations, which are always Cartesian.

The default CRS value OGC:CRS84 means that the objects must be stored in longitude, latitude based on the WGS84 datum.

Custom CRS values can be specified by a string of the format type:identifier, where type is one of the following values:

srid: Spatial reference identifier,identifieris the SRID itself.projjson: PROJJSON,identifieris the name of a table property where the projjson string is stored.

For geography types, the custom CRS must be geographic, with longitudes bound by [-180, 180] and latitudes bound by [-90, 90].

Edge-Interpolation Algorithm🔗

For geography types, an additional parameter A specifies an algorithm for interpolating edges, and is one of the following values:

spherical: edges are interpolated as geodesics on a sphere.vincenty: https://en.wikipedia.org/wiki/Vincenty%27s_formulaethomas: Thomas, Paul D. Spheroidal geodesics, reference systems, & local geometry. US Naval Oceanographic Office, 1970.andoyer: Thomas, Paul D. Mathematical models for navigation systems. US Naval Oceanographic Office, 1965.karney: Karney, Charles FF. "Algorithms for geodesics." Journal of Geodesy 87 (2013): 43-55, and GeographicLib

Default values🔗

Default values can be tracked for struct fields (both nested structs and the top-level schema's struct). There can be two defaults with a field:

initial-defaultis used to populate the field's value for all records that were written before the field was added to the schemawrite-defaultis used to populate the field's value for any records written after the field was added to the schema, if the writer does not supply the field's value

The initial-default is set only when a field is added to an existing schema. The write-default is initially set to the same value as initial-default and can be changed through schema evolution. If either default is not set for an optional field, then the default value is null for compatibility with older spec versions.

The initial-default and write-default produce SQL default value behavior, without rewriting data files. SQL default value behavior when a field is added handles all existing rows as though the rows were written with the new field's default value. Default value changes may only affect future records and all known fields are written into data files. Omitting a known field when writing a data file is never allowed. The write default for a field must be written if a field is not supplied to a write. If the write default for a required field is not set, the writer must fail.

All columns of unknown, variant, geometry, and geography types must default to null. Non-null values for initial-default or write-default are invalid.

Default values for the fields of a struct are tracked as initial-default and write-default at the field level. Default values for fields that are nested structs must not contain default values for the struct's fields (sub-fields). Sub-field defaults are tracked in sub-field's metadata. As a result, the default stored for a nested struct may be either null or a non-null struct with no field values. The effective default value is produced by setting each fields' default in a new struct.

For example, a struct column point with fields x (default 0) and y (default 0) can be defaulted to {"x": 0, "y": 0} or null. A non-null default is stored by setting initial-default or write-default to an empty struct ({}) that will use field values set from each field's initial-default or write-default, respectively.

point default |

point.x default |

point.y default |

Data value | Result value |

|---|---|---|---|---|

null |

0 |

0 |

(missing) | null |

null |

0 |

0 |

{"x": 3} |

{"x": 3, "y": 0} |

{} |

0 |

0 |

(missing) | {"x": 0, "y": 0} |

{} |

0 |

0 |

{"y": -1} |

{"x": 0, "y": -1} |

Default values are attributes of fields in schemas and serialized with fields in the JSON format. See Appendix C.

Schema Evolution🔗

Schemas may be evolved by type promotion or adding, deleting, renaming, or reordering fields in structs (both nested structs and the top-level schema’s struct).

Evolution applies changes to the table's current schema to produce a new schema that is identified by a unique schema ID, is added to the table's list of schemas, and is set as the table's current schema.

Valid primitive type promotions are:

| Primitive type | v1, v2 valid type promotions | v3+ valid type promotions | Requirements |

|---|---|---|---|

unknown |

any type | ||

int |

long |

long |

|

date |

timestamp, timestamp_ns |

Promotion to timestamptz or timestamptz_ns is not allowed; values outside the promoted type's range must result in a runtime failure |

|

float |

double |

double |

|

decimal(P, S) |

decimal(P', S) if P' > P |

decimal(P', S) if P' > P |

Widen precision only |

Iceberg's Avro manifest format does not store the type of lower and upper bounds, and type promotion does not rewrite existing bounds. For example, when a float is promoted to double, existing data file bounds are encoded as 4 little-endian bytes rather than 8 little-endian bytes for double. To correctly decode the value, the original type at the time the file was written must be inferred according to the following table:

| Current type | Length of bounds | Inferred type at write time |

|---|---|---|

long |

4 bytes | int |

long |

8 bytes | long |

double |

4 bytes | float |

double |

8 bytes | double |

timestamp |

4 bytes | date |

timestamp |

8 bytes | timestamp |

timestamp_ns |

4 bytes | date |

timestamp_ns |

8 bytes | timestamp_ns |

decimal(P, S) |

any | decimal(P', S); P' <= P |

Type promotion is not allowed for a field that is referenced by source-id or source-ids of a partition field if the partition transform would produce a different value after promoting the type. For example, bucket[N] produces different hash values for 34 and "34" (2017239379 != -427558391) but the same value for 34 and 34L; when an int field is the source for a bucket partition field, it may be promoted to long but not to string. This may happen for the following type promotion cases:

datetotimestamportimestamp_ns

Any struct, including a top-level schema, can evolve through deleting fields, adding new fields, renaming existing fields, reordering existing fields, or promoting a primitive using the valid type promotions. Adding a new field assigns a new ID for that field and for any nested fields. Renaming an existing field must change the name, but not the field ID. Deleting a field removes it from the current schema. Field deletion cannot be rolled back unless the field was nullable or if the current snapshot has not changed.

Grouping a subset of a struct’s fields into a nested struct is not allowed, nor is moving fields from a nested struct into its immediate parent struct (struct<a, b, c> ↔ struct<a, struct<b, c>>). Evolving primitive types to structs is not allowed, nor is evolving a single-field struct to a primitive (map<string, int> ↔ map<string, struct<int>>).

Struct evolution requires the following rules for default values:

- The

initial-defaultmust be set when a field is added and cannot change - The

write-defaultmust be set when a field is added and may change - When a required field is added, both defaults must be set to a non-null value

- When an optional field is added, the defaults may be null and should be explicitly set

- When a field that is a struct type is added, its default may only be null or a non-null struct with no field values. Default values for fields must be stored in field metadata.

- If a field value is missing from a struct's

initial-default, the field'sinitial-defaultmust be used for the field - If a field value is missing from a struct's

write-default, the field'swrite-defaultmust be used for the field

Column Projection🔗

Columns in Iceberg data files are selected by field id. The table schema's column names and order may change after a data file is written, and projection must be done using field ids.

Values for field ids which are not present in a data file must be resolved according the following rules:

- Return the value from partition metadata if an Identity Transform exists for the field and the partition value is present in the

partitionstruct ondata_fileobject in the manifest. This allows for metadata only migrations of Hive tables. - Use

schema.name-mapping.defaultmetadata to map field id to columns without field id as described below and use the column if it is present. - Return the default value if it has a defined

initial-default(See Default values section for more details). - Return

nullin all other cases.

For example, a file may be written with schema 1: a int, 2: b string, 3: c double and read using projection schema 3: measurement, 2: name, 4: a. This must select file columns c (renamed to measurement), b (now called name), and a column of null values called a; in that order.

Tables may also define a property schema.name-mapping.default with a JSON name mapping containing a list of field mapping objects. These mappings provide fallback field ids to be used when a data file does not contain field id information. Each object should contain

names: A required list of 0 or more names for a field.field-id: An optional Iceberg field ID used when a field's name is present innamesfields: An optional list of field mappings for child field of structs, maps, and lists.

Field mapping fields are constrained by the following rules:

- A name may contain

.but this refers to a literal name, not a nested field. For example,a.brefers to a field nameda.b, not child fieldbof fielda. - Each child field should be defined with their own field mapping under

fields. - Multiple values for

namesmay be mapped to a single field ID to support cases where a field may have different names in different data files. For example, all Avro field aliases should be listed innames. - Fields which exist only in the Iceberg schema and not in imported data files may use an empty

nameslist. - Fields that exist in imported files but not in the Iceberg schema may omit

field-id. - List types should contain a mapping in

fieldsforelement. - Map types should contain mappings in

fieldsforkeyandvalue. - Struct types should contain mappings in

fieldsfor their child fields.

For details on serialization, see Appendix C.

Identifier Field IDs🔗

A schema can optionally track the set of primitive fields that identify rows in a table, using the property identifier-field-ids (see JSON encoding in Appendix C).

Two rows are the "same"---that is, the rows represent the same entity---if the identifier fields are equal. However, uniqueness of rows by this identifier is not guaranteed or required by Iceberg and it is the responsibility of processing engines or data providers to enforce.

Identifier fields may be nested in structs but cannot be nested within maps or lists. Float, double, and optional fields cannot be used as identifier fields and a nested field cannot be used as an identifier field if it is nested in an optional struct, to avoid null values in identifiers.

Reserved Field IDs🔗

Iceberg tables must not use field ids greater than 2147483447 (Integer.MAX_VALUE - 200). This id range is reserved for metadata columns that can be used in user data schemas, like the _file column that holds the file path in which a row was stored.

The set of metadata columns is:

| Field id, name | Type | Description |

|---|---|---|

2147483646 _file |

string |

Path of the file in which a row is stored |

2147483645 _pos |

long |

Ordinal position of a row in the source data file, starting at 0 |

2147483644 _deleted |

boolean |

Whether the row has been deleted |

2147483643 _spec_id |

int |

Spec ID used to track the file containing a row |

2147483642 _partition |

struct |

Partition to which a row belongs |

2147483546 file_path |

string |

Path of a file, used in position-based delete files |

2147483545 pos |

long |

Ordinal position of a row, used in position-based delete files |

2147483544 row |

struct<...> |

Deleted row values, used in position-based delete files |

2147483543 _change_type |

string |

The record type in the changelog (INSERT, DELETE, UPDATE_BEFORE, or UPDATE_AFTER) |

2147483542 _change_ordinal |

int |

The order of the change |

2147483541 _commit_snapshot_id |

long |

The snapshot ID in which the change occurred |

2147483540 _row_id |

long |

A unique long assigned for row lineage, see Row Lineage |

2147483539 _last_updated_sequence_number |

long |

The sequence number which last updated this row, see Row Lineage |

Row Lineage🔗

In v3 and later, an Iceberg table must track row lineage fields for all newly created rows. Engines must maintain the next-row-id table field and the following row-level fields when writing data files:

_row_ida unique long identifier for every row within the table. The value is assigned via inheritance when a row is first added to the table._last_updated_sequence_numberthe sequence number of the commit that last updated a row. The value is inherited when a row is first added or modified.

These fields are assigned and updated by inheritance because the commit sequence number and starting row ID are not assigned until the snapshot is successfully committed. Inheritance is used to allow writing data and manifest files before values are known so that it is not necessary to rewrite data and manifest files when an optimistic commit is retried.

Row lineage does not track lineage for rows updated via Equality Deletes, because engines using equality deletes avoid reading existing data before writing changes and can't provide the original row ID for the new rows. These updates are always treated as if the existing row was completely removed and a unique new row was added.

Row lineage assignment🔗

When a row is added or modified, the _last_updated_sequence_number field is set to null so that it is inherited when reading. Similarly, the _row_id field for an added row is set to null and assigned when reading.

A data file with only new rows for the table may omit the _last_updated_sequence_number and _row_id. If the columns are missing, readers should treat both columns as if they exist and are set to null for all rows.

On read, if _last_updated_sequence_number is null it is assigned the sequence_number of the data file's manifest entry. The data sequence number of a data file is documented in Sequence Number Inheritance.

When null, a row's _row_id field is assigned to the first_row_id from its containing data file plus the row position in that data file (_pos). A data file's first_row_id field is assigned using inheritance and is documented in First Row ID Inheritance. A manifest's first_row_id is assigned when writing the manifest list for a snapshot and is documented in First Row ID Assignment. A snapshot's first-row-id is set to the table's next-row-id and is documented in Snapshot Row IDs.

When an existing row is moved to a different data file for any reason, writers should write _row_id and _last_updated_sequence_number according to the following rules:

- The row's existing non-null

_row_idmust be copied into the new data file - If the write has modified the row, the

_last_updated_sequence_numberfield must be set tonull(so that the modification's sequence number replaces the current value) - If the write has not modified the row, the existing non-null

_last_updated_sequence_numbervalue must be copied to the new data file

Engines may model operations as deleting/inserting rows or as modifications to rows that preserve row ids.

Row lineage example🔗

This example demonstrates how _row_id and _last_updated_sequence_number are assigned for a snapshot. This starts with a table with a next-row-id of 1000.

Writing a new append snapshot creates snapshot metadata with first-row-id assigned to the table's next-row-id:

The snapshot's manifest list will contain existing manifests, plus new manifests that are each assigned a first_row_id based on the added_rows_count and existing_rows_count of preceding new manifests:

manifest_path |

added_rows_count |

existing_rows_count |

first_row_id |

|---|---|---|---|

| ... | ... | ... | ... |

| existing | 75 | 0 | 925 |

| added1 | 100 | 25 | 1000 |

| added2 | 0 | 100 | 1125 |

| added3 | 125 | 25 | 1225 |

The existing manifests are written with the first_row_id assigned when the manifests were added to the table.

The first added manifest, added1, is assigned the same first_row_id as the snapshot and each of the remaining added manifests are assigned a first_row_id based on the number of rows in preceding manifests that were assigned a first_row_id.

Note that the second file, added2, changes the first_row_id of the next manifest even though it contains no added data files because any data file without a first_row_id could be assigned one, even if it has existing status. This is optional if the writer knows that existing data files in the manifest have assigned first_row_id values.

Within added1, the first added manifest, each data file's first_row_id follows a similar pattern:

status |

file_path |

record_count |

first_row_id |

|---|---|---|---|

| EXISTING | data1 | 25 | 800 |

| ADDED | data2 | 50 | null (1000) |

| ADDED | data3 | 50 | null (1050) |

The first_row_id of the EXISTING file data1 was already assigned, so the file metadata was copied into manifest added1.

Files data2 and data3 are written with null for first_row_id and are assigned first_row_id at read time based on the manifest's first_row_id and the record_count of previous files without first_row_id in this manifest: (1,000 + 0) and (1,000 + 50).

The snapshot then populates the total number of added-rows based on the sum of all added rows in the manifests: 100 (50 + 50)

When the new snapshot is committed, the table's next-row-id must also be updated (even if the new snapshot is not in the main branch). Because 375 rows were in data files in manifests that were assigned a first_row_id (added1 100+25, added2 0+100, added3 125+25) the new value is 1,000 + 375 = 1,375.

Row Lineage for Upgraded Tables🔗

When a table is upgraded to v3, its next-row-id is initialized to 0 and existing snapshots are not modified (that is, first-row-id remains unset or null). For such snapshots without first-row-id, first_row_id values for data files and data manifests are null, and values for _row_id are read as null for all rows. When first_row_id is null, inherited row ID values are also null.

Snapshots that are created after upgrading to v3 must set the snapshot's first-row-id and assign row IDs to existing and added files in the snapshot. When writing the manifest list, all data manifests must be assigned a first_row_id, which assigns a first_row_id to all data files via inheritance.

Note that:

- Snapshots created before upgrading to v3 do not have row IDs.

- After upgrading, new snapshots in different branches will assign disjoint ID ranges to existing data files, based on the table's

next-row-idwhen the snapshot is committed. For a data file in multiple branches, a writer may write thefirst_row_idfrom another branch or may assign a newfirst_row_idto the data file (to avoid large metadata rewrites). - Existing rows will inherit

_last_updated_sequence_numberfrom their containing data file.

Partitioning🔗

Data files are stored in manifests with a tuple of partition values that are used in scans to filter out files that cannot contain records that match the scan’s filter predicate. Partition values for a data file must be the same for all records stored in the data file. (Manifests store data files from any partition, as long as the partition spec is the same for the data files.)

Tables are configured with a partition spec that defines how to produce a tuple of partition values from a record. A partition spec has a list of fields that consist of:

- A source column id or a list of source column ids from the table’s schema

- A partition field id that is used to identify a partition field and is unique within a partition spec. In v2 table metadata, it is unique across all partition specs.

- A transform that is applied to the source column(s) to produce a partition value

- A partition name

The source columns, selected by ids, must be a primitive type and cannot be contained in a map or list, but may be nested in a struct. For details on how to serialize a partition spec to JSON, see Appendix C.

Partition specs capture the transform from table data to partition values. This is used to transform predicates to partition predicates, in addition to transforming data values. Deriving partition predicates from column predicates on the table data is used to separate the logical queries from physical storage: the partitioning can change and the correct partition filters are always derived from column predicates. This simplifies queries because users don’t have to supply both logical predicates and partition predicates. For more information, see Scan Planning below.

Partition fields that use an unknown transform can be read by ignoring the partition field for the purpose of filtering data files during scan planning. In v1 and v2, readers should ignore fields with unknown transforms while reading; this behavior is required in v3. Writers are not allowed to commit data using a partition spec that contains a field with an unknown transform.

Two partition specs are considered equivalent with each other if they have the same number of fields and for each corresponding field, the fields have the same source column IDs, transform definition and partition name. Writers must not create a new partition spec if there already exists a compatible partition spec defined in the table.

Partition field IDs must be reused if an existing partition spec contains an equivalent field.

Partition Transforms🔗

| Transform name | Description | Source types | Result type |

|---|---|---|---|

identity |

Source value, unmodified | Any except for geometry, geography, and variant |

Source type |

bucket[N] |

Hash of value, mod N (see below) |

int, long, decimal, date, time, timestamp, timestamptz, timestamp_ns, timestamptz_ns, string, uuid, fixed, binary |

int |

truncate[W] |

Value truncated to width W (see below) |

int, long, decimal, string, binary |

Source type |

year |

Extract a date or timestamp year, as years from 1970 | date, timestamp, timestamptz, timestamp_ns, timestamptz_ns |

int |

month |

Extract a date or timestamp month, as months from 1970-01-01 | date, timestamp, timestamptz, timestamp_ns, timestamptz_ns |

int |

day |

Extract a date or timestamp day, as days from 1970-01-01 | date, timestamp, timestamptz, timestamp_ns, timestamptz_ns |

int |

hour |

Extract a timestamp hour, as hours from 1970-01-01 00:00:00 | timestamp, timestamptz, timestamp_ns, timestamptz_ns |

int |

void |

Always produces null |

Any | Source type or int |

All transforms must return null for a null input value.

The void transform may be used to replace the transform in an existing partition field so that the field is effectively dropped in v1 tables. See partition evolution below.

Bucket Transform Details🔗

Bucket partition transforms use a 32-bit hash of the source value. The 32-bit hash implementation is the 32-bit Murmur3 hash, x86 variant, seeded with 0.

Transforms are parameterized by a number of buckets [1], N. The hash mod N must produce a positive value by first discarding the sign bit of the hash value. In pseudo-code, the function is:

Notes:

- Changing the number of buckets as a table grows is possible by evolving the partition spec.

For hash function details by type, see Appendix B.

Truncate Transform Details🔗

| Type | Config | Truncate specification | Examples |

|---|---|---|---|

int |

W, width |

v - (v % W) remainders must be positive [1] |

W=10: 1 → 0, -1 → -10 |

long |

W, width |

v - (v % W) remainders must be positive [1] |

W=10: 1 → 0, -1 → -10 |

decimal |

W, width (no scale) |

scaled_W = decimal(W, scale(v)) v - (v % scaled_W) [1, 2] |

W=50, s=2: 10.65 → 10.50 |

string |

L, length |

Substring of length L: v.substring(0, L) [3] |

L=3: iceberg → ice |

binary |

L, length |

Sub array of length L: v.subarray(0, L) [4] |

L=3: \x01\x02\x03\x04\x05 → \x01\x02\x03 |

Notes:

- The remainder,

v % W, must be positive. For languages where%can produce negative values, the correct truncate function is:v - (((v % W) + W) % W) - The width,

W, used to truncate decimal values is applied using the scale of the decimal column to avoid additional (and potentially conflicting) parameters. - Strings are truncated to a valid UTF-8 string with no more than

Lcode points. - In contrast to strings, binary values do not have an assumed encoding and are truncated to

Lbytes.

Partition Evolution🔗

Table partitioning can be evolved by adding, removing, renaming, or reordering partition spec fields.

Changing a partition spec produces a new spec identified by a unique spec ID that is added to the table's list of partition specs and may be set as the table's default spec.

When evolving a spec, changes should not cause partition field IDs to change because the partition field IDs are used as the partition tuple field IDs in manifest files.

In v2, partition field IDs must be explicitly tracked for each partition field. New IDs are assigned based on the last assigned partition ID in table metadata.

In v1, partition field IDs were not tracked, but were assigned sequentially starting at 1000 in the reference implementation. This assignment caused problems when reading metadata tables based on manifest files from multiple specs because partition fields with the same ID may contain different data types. For compatibility with old versions, the following rules are recommended for partition evolution in v1 tables:

- Do not reorder partition fields

- Do not drop partition fields; instead replace the field's transform with the

voidtransform - Only add partition fields at the end of the previous partition spec

Sorting🔗

Users can sort their data within partitions by columns to gain performance. The information on how the data is sorted can be declared per data or delete file, by a sort order.

A sort order is defined by a sort order id and a list of sort fields. The order of the sort fields within the list defines the order in which the sort is applied to the data. Each sort field consists of:

- A source column id or a list of source column ids from the table's schema

- A transform that is used to produce values to be sorted on from the source column(s). This is the same transform as described in partition transforms.

- A sort direction, that can only be either

ascordesc - A null order that describes the order of null values when sorted. Can only be either

nulls-firstornulls-last

For details on how to serialize a sort order to JSON, see Appendix C.

Order id 0 is reserved for the unsorted order.

Sorting floating-point numbers should produce the following behavior: -NaN < -Infinity < -value < -0 < 0 < value < Infinity < NaN. This aligns with the implementation of Java floating-point types comparisons.

A data or delete file is associated with a sort order by the sort order's id within a manifest. Therefore, the table must declare all the sort orders for lookup. A table could also be configured with a default sort order id, indicating how the new data should be sorted by default. Writers should use this default sort order to sort the data on write, but are not required to if the default order is prohibitively expensive, as it would be for streaming writes.

Manifests🔗

A manifest is an immutable Avro file that lists data files or delete files, along with each file’s partition data tuple, metrics, and tracking information. One or more manifest files are used to store a snapshot, which tracks all of the files in a table at some point in time. Manifests are tracked by a manifest list for each table snapshot.

A manifest is a valid Iceberg data file: files must use valid Iceberg formats, schemas, and column projection.

A manifest may store either data files or delete files, but not both because manifests that contain delete files are scanned first during job planning. Whether a manifest is a data manifest or a delete manifest is stored in manifest metadata.

A manifest stores files for a single partition spec. When a table’s partition spec changes, old files remain in the older manifest and newer files are written to a new manifest. This is required because a manifest file’s schema is based on its partition spec (see below). The partition spec of each manifest is also used to transform predicates on the table's data rows into predicates on partition values that are used during job planning to select files from a manifest.

A manifest file must store the partition spec and other metadata as properties in the Avro file's key-value metadata:

| v1 | v2 | Key | Value |

|---|---|---|---|

| required | required | schema |

JSON representation of the table schema at the time the manifest was written |

| optional | required | schema-id |

ID of the schema used to write the manifest as a string |

| required | required | partition-spec |

JSON representation of only the partition fields array of the partition spec used to write the manifest. See Appendix C |

| optional | required | partition-spec-id |

ID of the partition spec used to write the manifest as a string |

| optional | required | format-version |

Table format version number of the manifest as a string |

| required | content |

Type of content files tracked by the manifest: "data" or "deletes" |

The schema of a manifest file is defined by the manifest_entry struct, described in the following section.

Manifest Entry Fields🔗

The manifest_entry struct consists of the following fields:

| v1 | v2 | Field id, name | Type | Description |

|---|---|---|---|---|

| required | required | 0 status |

int with meaning: 0: EXISTING 1: ADDED 2: DELETED |

Used to track additions and deletions. Deletes are informational only and not used in scans. |

| required | optional | 1 snapshot_id |

long |

Snapshot id where the file was added, or deleted if status is 2. Inherited when null. |

| optional | 3 sequence_number |

long |

Data sequence number of the file. Inherited when null and status is 1 (added). | |

| optional | 4 file_sequence_number |

long |

File sequence number indicating when the file was added. Inherited when null and status is 1 (added). | |

| required | required | 2 data_file |

data_file struct (see below) |

File path, partition tuple, metrics, ... |

The manifest entry fields are used to keep track of the snapshot in which files were added or logically deleted. The data_file struct, defined below, is nested inside the manifest entry so that it can be easily passed to job planning without the manifest entry fields.

When a file is added to the dataset, its manifest entry should store the snapshot ID in which the file was added and set status to 1 (added).

When a file is replaced or deleted from the dataset, its manifest entry fields store the snapshot ID in which the file was deleted and status 2 (deleted). The file may be deleted from the file system when the snapshot in which it was deleted is garbage collected, assuming that older snapshots have also been garbage collected [1].

Iceberg v2 adds data and file sequence numbers to the entry and makes the snapshot ID optional. Values for these fields are inherited from manifest metadata when null. That is, if the field is null for an entry, then the entry must inherit its value from the manifest file's metadata, stored in the manifest list.

The sequence_number field represents the data sequence number and must never change after a file is added to the dataset. The data sequence number represents a relative age of the file content and should be used for planning which delete files apply to a data file.

The file_sequence_number field represents the sequence number of the snapshot that added the file and must also remain unchanged upon assigning at commit. The file sequence number can't be used for pruning delete files as the data within the file may have an older data sequence number.

The data and file sequence numbers are inherited only if the entry status is 1 (added). If the entry status is 0 (existing) or 2 (deleted), the entry must include both sequence numbers explicitly.

Notes:

- Technically, data files can be deleted when the last snapshot that contains the file as “live” data is garbage collected. But this is harder to detect and requires finding the diff of multiple snapshots. It is easier to track what files are deleted in a snapshot and delete them when that snapshot expires. It is not recommended to add a deleted file back to a table. Adding a deleted file can lead to edge cases where incremental deletes can break table snapshots.

- Manifest list files are required in v2, so that the

sequence_numberandsnapshot_idto inherit are always available.

Data File Fields🔗

The data_file struct consists of the following fields:

| v1 | v2 | v3 | Field id, name | Type | Description |

|---|---|---|---|---|---|

| required | required | 134 content |

int with meaning: 0: DATA, 1: POSITION DELETES, 2: EQUALITY DELETES |

Type of content stored by the data file: data, equality deletes, or position deletes (all v1 files are data files) | |

| required | required | required | 100 file_path |

string |

Full URI for the file with FS scheme |

| required | required | required | 101 file_format |

string |

String file format name, avro, orc, parquet, or puffin |

| required | required | required | 102 partition |

struct<...> |

Partition data tuple, schema based on the partition spec output using partition field ids for the struct field ids |

| required | required | required | 103 record_count |

long |

Number of records in this file, or the cardinality of a deletion vector |

| required | required | required | 104 file_size_in_bytes |

long |

Total file size in bytes |

| required | 105 block_size_in_bytes |

long |

Deprecated. Always write a default in v1. Do not write in v2 or v3. | ||

| optional | 106 file_ordinal |

int |

Deprecated. Do not write. | ||

| optional | 107 sort_columns |

list<112: int> |

Deprecated. Do not write. | ||

| optional | optional | optional | 108 column_sizes |

map<117: int, 118: long> |

Map from column id to the total size on disk of all regions that store the column. Does not include bytes necessary to read other columns, like footers. Leave null for row-oriented formats (Avro) |

| optional | optional | optional | 109 value_counts |

map<119: int, 120: long> |

Map from column id to number of values in the column (including null and NaN values) |

| optional | optional | optional | 110 null_value_counts |

map<121: int, 122: long> |

Map from column id to number of null values in the column |

| optional | optional | optional | 137 nan_value_counts |

map<138: int, 139: long> |

Map from column id to number of NaN values in the column |

| optional | optional | 111 distinct_counts |

map<123: int, 124: long> |

Deprecated. Do not write. | |

| optional | optional | optional | 125 lower_bounds |

map<126: int, 127: binary> |

Map from column id to lower bound in the column serialized as binary [1]. Each value must be less than or equal to all non-null, non-NaN values in the column for the file [2] |

| optional | optional | optional | 128 upper_bounds |

map<129: int, 130: binary> |

Map from column id to upper bound in the column serialized as binary [1]. Each value must be greater than or equal to all non-null, non-Nan values in the column for the file [2] |

| optional | optional | optional | 131 key_metadata |

binary |

Implementation-specific key metadata for encryption |

| optional | optional | optional | 132 split_offsets |

list<133: long> |

Split offsets for the data file. For example, all row group offsets in a Parquet file. Must be sorted ascending |

| optional | optional | 135 equality_ids |

list<136: int> |

Field ids used to determine row equality in equality delete files. Required when content=2 and should be null otherwise. Fields with ids listed in this column must be present in the delete file |

|

| optional | optional | optional | 140 sort_order_id |

int |

ID representing sort order for this file [3]. |

| optional | 142 first_row_id |

long |

The _row_id for the first row in the data file. See First Row ID Inheritance |

||

| optional | optional | 143 referenced_data_file |

string |

Fully qualified location (URI with FS scheme) of a data file that all deletes reference [4] | |

| optional | 144 content_offset |

long |

The offset in the file where the content starts [5] | ||

| optional | 145 content_size_in_bytes |

long |

The length of a referenced content stored in the file; required if content_offset is present [5] |

The partition struct stores the tuple of partition values for each file. Its type is derived from the partition fields of the partition spec used to write the manifest file. In v2, the partition struct's field ids must match the ids from the partition spec.

The column metrics maps are used when filtering to select both data and delete files. For delete files, the metrics must store bounds and counts for all deleted rows, or must be omitted. Storing metrics for deleted rows ensures that the values can be used during job planning to find delete files that must be merged during a scan.

Notes:

- Single-value serialization for lower and upper bounds is detailed in Appendix D.

- For

floatanddouble, the value-0.0must precede+0.0, as in the IEEE 754totalOrderpredicate. NaNs are not permitted as lower or upper bounds. - If sort order ID is missing or unknown, then the order is assumed to be unsorted. Only data files and equality delete files should be written with a non-null order id. Position deletes are required to be sorted by file and position, not a table order, and should set sort order id to null. Readers must ignore sort order id for position delete files.

- Position delete metadata can use

referenced_data_filewhen all deletes tracked by the entry are in a single data file. Setting the referenced file is required for deletion vectors. - The

content_offsetandcontent_size_in_bytesfields are used to reference a specific blob for direct access to a deletion vector. For deletion vectors, these values are required and must exactly match theoffsetandlengthstored in the Puffin footer for the deletion vector blob. - The following field ids are reserved on

data_file: 141.

Bounds for Variant, Geometry, and Geography🔗

For Variant, values in the lower_bounds and upper_bounds maps store serialized Variant objects that contain lower or upper bounds respectively. The object keys for the bound-variants are normalized JSON path expressions that uniquely identify a field. The object values are primitive Variant representations of the lower or upper bound for that field. Including bounds for any field is optional and upper and lower bounds must have the same Variant type.

Bounds for a field must be accurate for all non-null values of the field in a data file. Bounds for values within arrays must be accurate for all values in the array. Bounds must not be written to describe values with mixed Variant types (other than null). For example, a measurement field that contains int64 and null values may have bounds, but if the field also contained a string value such as n/a or 0 then the field may not have bounds.

The Variant bounds objects are serialized by concatenating the Variant encoding of the metadata (containing the normalized field paths) and the bounds object.

Field paths follow the JSON path format to use normalized path, such as $['location']['latitude'] or $['user.name']. The special path $ represents bounds for the variant root, indicating that the variant data consists of uniform primitive types, such as strings.

Examples of valid field paths using normalized JSON path format are:

$-- the root of the Variant value$['event_type']-- theevent_typefield in a Variant object$['user.name']-- the"user.name"field in a Variant object$['location']['latitude']-- thelatitudefield nested within alocationobject$['tags']-- thetagsarray$['addresses']['zip']-- thezipfield in anaddressesarray that contains objects

For geometry and geography types, lower_bounds and upper_bounds are both points of the following coordinates X, Y, Z, and M (see Appendix G) which are the lower / upper bound of all objects in the file.

For geography only, xmin (X value of lower_bounds) may be greater than xmax (X value of upper_bounds), in which case an object in this bounding box may match if it contains an X such that x >= xmin OR x <= xmax. In geographic terminology, the concepts of xmin, xmax, ymin, and ymax are also known as westernmost, easternmost, southernmost and northernmost, respectively. These points are further restricted to the canonical ranges of [-180..180] for X and [-90..90] for Y.

When calculating upper and lower bounds for geometry and geography, null or NaN values in a coordinate dimension are skipped; for example, POINT (1 NaN) contributes a value to X but no values to Y, Z, or M dimension bounds. If a dimension has only null or NaN values, that dimension is omitted from the bounding box. If either the X or Y dimension is missing then the bounding box itself is not produced.

Sequence Number Inheritance🔗

Manifests track the sequence number when a data or delete file was added to the table.

When adding a new file, its data and file sequence numbers are set to null because the snapshot's sequence number is not assigned until the snapshot is successfully committed. When reading, sequence numbers are inherited by replacing null with the manifest's sequence number from the manifest list.

It is also possible to add a new file with data that logically belongs to an older sequence number. In that case, the data sequence number must be provided explicitly and not inherited. However, the file sequence number must be always assigned when the snapshot is successfully committed.

When writing an existing file to a new manifest or marking an existing file as deleted, the data and file sequence numbers must be non-null and set to the original values that were either inherited or provided at the commit time.

Inheriting sequence numbers through the metadata tree allows writing a new manifest without a known sequence number, so that a manifest can be written once and reused in commit retries. To change a sequence number for a retry, only the manifest list must be rewritten.

When reading v1 manifests with no sequence number column, sequence numbers for all files must default to 0.

First Row ID Inheritance🔗

When adding a new data file, its first_row_id field is set to null because it is not assigned until the snapshot is successfully committed.

When reading, the first_row_id is assigned by replacing null with the manifest's first_row_id plus the sum of record_count for all data files that preceded the file in the manifest that also had a null first_row_id.

The inherited value of first_row_id must be written into data file metadata when creating existing and deleted entries. The value of first_row_id for delete files is always null.

Any null (unassigned) first_row_id must be assigned via inheritance, even if the data file is existing. This ensures that row IDs are assigned to existing data files in upgraded tables in the first commit after upgrading to v3.

Snapshots🔗

A snapshot consists of the following fields:

| v1 | v2 | v3 | Field | Description |

|---|---|---|---|---|

| required | required | required | snapshot-id |

A unique long ID |

| optional | optional | optional | parent-snapshot-id |

The snapshot ID of the snapshot's parent. Omitted for any snapshot with no parent |

| required | required | sequence-number |

A monotonically increasing long that tracks the order of changes to a table | |

| required | required | required | timestamp-ms |

A timestamp when the snapshot was created, used for garbage collection and table inspection |

| optional | required | required | manifest-list |

The location of a manifest list for this snapshot that tracks manifest files with additional metadata |

| optional | manifests |

A list of manifest file locations. Must be omitted if manifest-list is present |

||

| optional | required | required | summary |

A string map that summarizes the snapshot changes, including operation as a required field (see below) |

| optional | optional | optional | schema-id |

ID of the table's current schema when the snapshot was created |

| required | first-row-id |

The first _row_id assigned to the first row in the first data file in the first manifest, see Row Lineage |

||

| required | added-rows |

The upper bound of the number of rows with assigned row IDs, see Row Lineage | ||

| optional | key-id |

ID of the encryption key that encrypts the manifest list key metadata |

The snapshot summary's operation field is used by some operations, like snapshot expiration, to skip processing certain snapshots. Possible operation values are:

append-- Only data files were added and no files were removed.replace-- Data and delete files were added and removed without changing table data; i.e., compaction, changing the data file format, or relocating data files.overwrite-- Data and delete files were added and removed in a logical overwrite operation.delete-- Data files were removed and their contents logically deleted and/or delete files were added to delete rows.

For other optional snapshot summary fields, see Appendix F.

Data and delete files for a snapshot can be stored in more than one manifest. This enables:

- Appends can add a new manifest to minimize the amount of data written, instead of adding new records by rewriting and appending to an existing manifest. (This is called a “fast append”.)

- Tables can use multiple partition specs. A table’s partition configuration can evolve if, for example, its data volume changes. Each manifest uses a single partition spec, and queries do not need to change because partition filters are derived from data predicates.

- Large tables can be split across multiple manifests so that implementations can parallelize job planning or reduce the cost of rewriting a manifest.

Manifests for a snapshot are tracked by a manifest list.

Valid snapshots are stored as a list in table metadata. For serialization, see Appendix C.

Snapshot Row IDs🔗

A snapshot's first-row-id is assigned to the table's current next-row-id on each commit attempt. If a commit is retried, the first-row-id must be reassigned based on the table's current next-row-id. The first-row-id field is required even if a commit does not assign any ID space.

The snapshot's first-row-id is the starting first_row_id assigned to manifests in the snapshot's manifest list.

The snapshot's added-rows captures the upper bound of the number of rows with assigned row IDs.

It can be used safely to increment the table's next-row-id during a commit.

It can be more than the number of rows added in this snapshot and include some existing rows,

see Row Lineage Example.

Manifest Lists🔗

Snapshots are embedded in table metadata, but the list of manifests for a snapshot are stored in a separate manifest list file.

A new manifest list is written for each attempt to commit a snapshot because the list of manifests always changes to produce a new snapshot. When a manifest list is written, the (optimistic) sequence number of the snapshot is written for all new manifest files tracked by the list.

A manifest list includes summary metadata that can be used to avoid scanning all of the manifests in a snapshot when planning a table scan. This includes the number of added, existing, and deleted files, and a summary of values for each field of the partition spec used to write the manifest.

A manifest list is a valid Iceberg data file: files must use valid Iceberg formats, schemas, and column projection.

Manifest list files store manifest_file, a struct with the following fields:

| v1 | v2 | v3 | Field id, name | Type | Description |

|---|---|---|---|---|---|

| required | required | required | 500 manifest_path |

string |

Location of the manifest file |

| required | required | required | 501 manifest_length |

long |

Length of the manifest file in bytes |

| required | required | required | 502 partition_spec_id |

int |

ID of a partition spec used to write the manifest; must be listed in table metadata partition-specs |

| required | required | 517 content |

int with meaning: 0: data, 1: deletes |

The type of files tracked by the manifest, either data or delete files; 0 for all v1 manifests | |

| required | required | 515 sequence_number |

long |

The sequence number when the manifest was added to the table; use 0 when reading v1 manifest lists | |

| required | required | 516 min_sequence_number |

long |

The minimum data sequence number of all live data or delete files in the manifest; use 0 when reading v1 manifest lists | |

| required | required | required | 503 added_snapshot_id |

long |

ID of the snapshot where the manifest file was added |

| optional | required | required | 504 added_files_count |

int |

Number of entries in the manifest that have status ADDED (1), when null this is assumed to be non-zero |

| optional | required | required | 505 existing_files_count |

int |

Number of entries in the manifest that have status EXISTING (0), when null this is assumed to be non-zero |

| optional | required | required | 506 deleted_files_count |

int |

Number of entries in the manifest that have status DELETED (2), when null this is assumed to be non-zero |

| optional | required | required | 512 added_rows_count |

long |

Number of rows in all of files in the manifest that have status ADDED, when null this is assumed to be non-zero |

| optional | required | required | 513 existing_rows_count |

long |

Number of rows in all of files in the manifest that have status EXISTING, when null this is assumed to be non-zero |

| optional | required | required | 514 deleted_rows_count |

long |

Number of rows in all of files in the manifest that have status DELETED, when null this is assumed to be non-zero |

| optional | optional | optional | 507 partitions |

list<508: field_summary> (see below) |

A list of field summaries for each partition field in the spec. Each field in the list corresponds to a field in the manifest file’s partition spec. |

| optional | optional | optional | 519 key_metadata |

binary |

Implementation-specific key metadata for encryption |

| optional | 520 first_row_id |

long |

The starting _row_id to assign to rows added by ADDED data files First Row ID Assignment |

field_summary is a struct with the following fields:

| v1 | v2 | Field id, name | Type | Description |

|---|---|---|---|---|

| required | required | 509 contains_null |

boolean |

Whether the manifest contains at least one partition with a null value for the field |

| optional | optional | 518 contains_nan |

boolean |

Whether the manifest contains at least one partition with a NaN value for the field |

| optional | optional | 510 lower_bound |

bytes [1] |

Lower bound for the non-null, non-NaN values in the partition field, or null if all values are null or NaN [2] |

| optional | optional | 511 upper_bound |

bytes [1] |

Upper bound for the non-null, non-NaN values in the partition field, or null if all values are null or NaN [2] |

Notes:

- Lower and upper bounds are serialized to bytes using the single-object serialization in Appendix D. The type of used to encode the value is the type of the partition field data.

- If -0.0 is a value of the partition field, the

lower_boundmust not be +0.0, and if +0.0 is a value of the partition field, theupper_boundmust not be -0.0.

First Row ID Assignment🔗

The first_row_id for existing manifests must be preserved when writing a new manifest list. The value of first_row_id for delete manifests is always null. The first_row_id is only assigned for data manifests that do not have a first_row_id. Assignment must account for data files that will be assigned first_row_id values when the manifest is read.

The first manifest without a first_row_id is assigned a value that is greater than or equal to the first_row_id of the snapshot. Subsequent manifests without a first_row_id are assigned one based on the previous manifest to be assigned a first_row_id. Each assigned first_row_id must increase by the row count of all files that will be assigned a first_row_id via inheritance in the last assigned manifest. That is, each first_row_id must be greater than or equal to the last assigned first_row_id plus the total record count of data files with a null first_row_id in the last assigned manifest.

A simple and valid approach is to estimate the number of rows in data files that will be assigned a first_row_id using the manifest's added_rows_count and existing_rows_count: first_row_id = last_assigned.first_row_id + last_assigned.added_rows_count + last_assigned.existing_rows_count.

Scan Planning🔗

Scans are planned by reading the manifest files for the current snapshot. Deleted entries in data and delete manifests (those marked with status "DELETED") are not used in a scan.

Manifests that contain no matching files, determined using either file counts or partition summaries, may be skipped.

For each manifest, scan predicates, which filter data rows, are converted to partition predicates, which filter partition tuples. These partition predicates are used to select relevant data files, delete files, and deletion vector metadata. Conversion uses the partition spec that was used to write the manifest file regardless of the current partition spec.

Scan predicates are converted to partition predicates using an inclusive projection: if a scan predicate matches a row, then the partition predicate must match that row’s partition. This is called inclusive [1] because rows that do not match the scan predicate may be included in the scan by the partition predicate.

For example, an events table with a timestamp column named ts that is partitioned by ts_day=day(ts) is queried by users with ranges over the timestamp column: ts > X. The inclusive projection is ts_day >= day(X), which is used to select files that may have matching rows. Note that, in most cases, timestamps just before X will be included in the scan because the file contains rows that match the predicate and rows that do not match the predicate.

The inclusive projection for an unknown partition transform is true because the partition field is ignored and not used in filtering.

Scan predicates are also used to filter data and delete files using column bounds and counts that are stored by field id in manifests. The same filter logic can be used for both data and delete files because both store metrics of the rows either inserted or deleted. If metrics show that a delete file has no rows that match a scan predicate, it may be ignored just as a data file would be ignored [2].

Data files that match the query filter must be read by the scan.

Note that for any snapshot, all file paths marked with "ADDED" or "EXISTING" may appear at most once across all manifest files in the snapshot. If a file path appears more than once, the results of the scan are undefined. Reader implementations may raise an error in this case, but are not required to do so.

Delete files and deletion vector metadata that match the filters must be applied to data files at read time, limited by the following scope rules.

- A deletion vector must be applied to a data file when all of the following are true:

- The data file's

file_pathis equal to the deletion vector'sreferenced_data_file - The data file's data sequence number is less than or equal to the deletion vector's data sequence number

- The data file's partition (both spec and partition values) is equal [4] to the deletion vector's partition

- The data file's

- A position delete file must be applied to a data file when all of the following are true:

- The data file's

file_pathis equal to the delete file'sreferenced_data_fileif it is non-null - The data file's data sequence number is less than or equal to the delete file's data sequence number

- The data file's partition (both spec and partition values) is equal [4] to the delete file's partition

- There is no deletion vector that must be applied to the data file (when added, such a vector must contain all deletes from existing position delete files)

- The data file's

- An equality delete file must be applied to a data file when all of the following are true:

- The data file's data sequence number is strictly less than the delete's data sequence number

- The data file's partition (both spec id and partition values) is equal [4] to the delete file's partition or the delete file's partition spec is unpartitioned

In general, deletes are applied only to data files that are older and in the same partition, except for two special cases:

- Equality delete files stored with an unpartitioned spec are applied as global deletes. Otherwise, delete files do not apply to files in other partitions.

- Position deletes (vectors and files) must be applied to data files from the same commit, when the data and delete file data sequence numbers are equal. This allows deleting rows that were added in the same commit.

Notes:

- An alternative, strict projection, creates a partition predicate that will match a file if all of the rows in the file must match the scan predicate. These projections are used to calculate the residual predicates for each file in a scan.

- For example, if

file_ahas rows withidbetween 1 and 10 and a delete file contains rows withidbetween 1 and 4, a scan forid = 9may ignore the delete file because none of the deletes can match a row that will be selected. - Floating point partition values are considered equal if their IEEE 754 floating-point "single format" bit layout are equal with NaNs normalized to have only the most significant mantissa bit set (the equivalent of calling

Float.floatToIntBitsorDouble.doubleToLongBitsin Java). The Avro specification requires all floating point values to be encoded in this format. - Unknown partition transforms do not affect partition equality. Although partition fields with unknown transforms are ignored for filtering, the result of an unknown transform is still used when testing whether partition values are equal.

Snapshot References🔗

Iceberg tables keep track of branches and tags using snapshot references. Tags are labels for individual snapshots. Branches are mutable named references that can be updated by committing a new snapshot as the branch's referenced snapshot using the Commit Conflict Resolution and Retry procedures.

The snapshot reference object records all the information of a reference including snapshot ID, reference type and Snapshot Retention Policy.

| v1 | v2 | Field name | Type | Description |

|---|---|---|---|---|

| required | required | snapshot-id |

long |

A reference's snapshot ID. The tagged snapshot or latest snapshot of a branch. |

| required | required | type |

string |

Type of the reference, tag or branch |

| optional | optional | min-snapshots-to-keep |

int |

For branch type only, a positive number for the minimum number of snapshots to keep in a branch while expiring snapshots. Defaults to table property history.expire.min-snapshots-to-keep. |

| optional | optional | max-snapshot-age-ms |

long |

For branch type only, a positive number for the max age of snapshots to keep when expiring, including the latest snapshot. Defaults to table property history.expire.max-snapshot-age-ms. |

| optional | optional | max-ref-age-ms |